Throw out your touchscreens, kibosh your Kinects: thought-controlled computing is the new new thing. Brain-computer interface technology has been simmering for years, and seems finally ready to bubble out of research labs and into the real world.

Earlier this year, friends of mine at the Toronto art space Site3 built a thought-controlled flamethrower, for fun. (Don’t you hate how the friends you’d least like to see projecting torrents of flame with a flick of their minds are always the ones who get that power?) Toronto has long been a hub for brain computing, in part because legendary cyborg Steve Mann is an engineering professor at the University of Toronto. Mann also cofounded the thought-controlled computing consultancy InteraXon, which built the neural installation at this year’s Olympics.

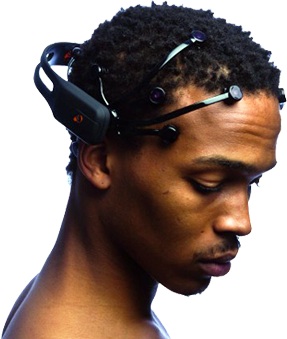

Both InteraXon and my pyromaniacal friends use brainwave-reading headsets made by Neurosky (whose promise TechCrunch noted five years ago) and Emotiv. Today’s headsets do much more than measure alpha and beta waves: Emotiv’s, in particular, can track eye movements, facial expressions, and emotional states, and can even detect directional thoughts.

The potential applications go way beyond flambés. Ariel Garten, InteraXon’s CEO, ticks off a laundry list that includes advance warning of epileptic seizures, headset-controlled airline entertainment systems, and a company that approached her hoping to build a thought-controlled welding system. Meanwhile, Columbia University’s Paul Sajda has scored $4.6 million from the Department of Defense for his EEG cap and the machine-learning algorithms he uses with it to improve image recognition and classification.

Gaming is also a big market (making the Kinect seem so five minutes ago), but the ability to connect neural headsets to mobile devices is even more interesting. Garten, who will be speaking at Le Web next week and at CES in January, sketches a compelling vision: stylish headsets become more common than Bluetooth earpieces are today, and their users interact with phones, kiosks, and other devices without so much as twitching a lip or finger.

InteraXon, which is self-funded and profitable, already connects neural headsets to iOS devices over Wi-Fi and Bluetooth. Both Neurosky and Emotiv have released SDKs for developers and have app stores up and running. Their futures look ripe with potential, at least until someone like Apple decides to play in this space. iMind, anyone?

We’re still a long way from real wetware (direct brain-computer connections), but last week an NYU professor had a digital camera implanted in his head. It’ll be many years, if ever, before that goes mainstream, but the line between the mind and its tech is growing finer. “It can be a transformational experience,” Garten says of the moment users first put on a headset. “For the first time, you’re consciously interacting with your own brain.”
